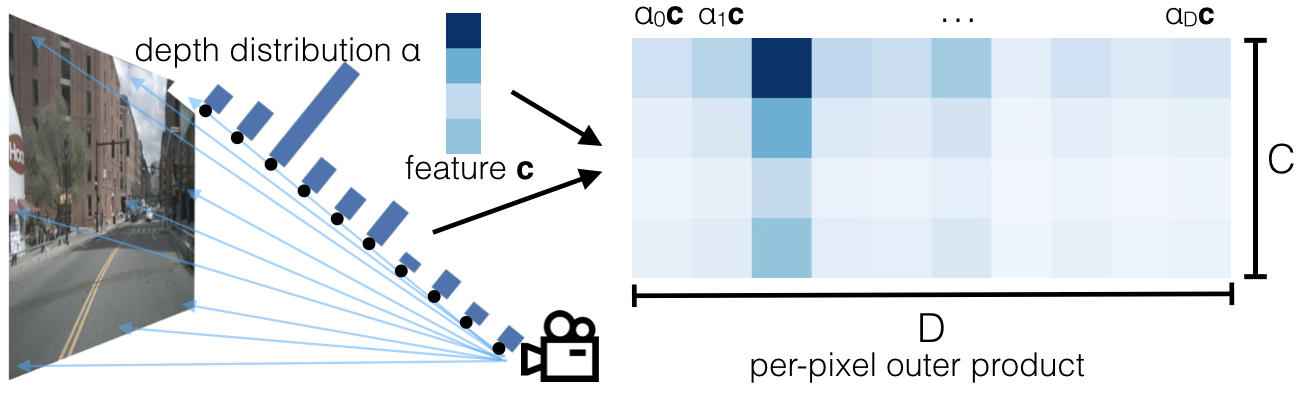

For each pixel, they predict a categorical distribution $\alpha \in \triangle^{|D|-1}$ over $|D|$ depth bins, where each bin has a step size of $\Delta$, and a context vector $c \in \mathbb{R}^C$ (top left). Features at each point along the ray are determined by the outer product of $\alpha$ and $c$ (right).

Note that this diagram shows a single pixel only. The pixel has a feature vector $c$ and a depth distribution $\alpha$. At cell (0, 2), the entry of the outer product of the depth distribution and the feature vector is the highest, so that cell is the darkest.
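To make the figure's reading concrete, here is a minimal pure-Python sketch of the outer product for one pixel. The values of `alpha` (depth distribution) and `c` (context vector) are made up for illustration, rows index depth bins and columns index feature channels (the figure's orientation may differ), and `outer` is a hypothetical helper name.

```python
def outer(alpha, c):
    """Return the |D| x C grid whose cell (i, j) is alpha[i] * c[j]."""
    return [[a * cj for cj in c] for a in alpha]

# Hypothetical values for one pixel: 4 depth bins, 3 feature channels.
alpha = [0.1, 0.6, 0.2, 0.1]  # depth distribution (sums to 1)
c = [0.5, 1.0, 2.0]           # context vector

grid = outer(alpha, c)
# Locate the cell with the largest value (the "darkest" cell in the figure).
best = max(
    ((i, j) for i in range(len(alpha)) for j in range(len(c))),
    key=lambda ij: grid[ij[0]][ij[1]],
)
print(best)  # → (1, 2)
```

With non-negative features, the largest cell is always where the most probable depth bin meets the largest channel, since each cell is just `alpha[i] * c[j]`.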

Lift: Latent Depth Distribution

The following comes directly from the paper.

The first stage of our model operates on each image in the camera rig in isolation. The purpose of this stage is to lift each image from a local 2-dimensional coordinate system to a 3-dimensional frame that is shared across all cameras.

The challenge of monocular sensor fusion is that we require depth to transform into reference frame coordinates but the depth associated to each pixel is inherently ambiguous. Our proposed solution is to generate representations at all possible depths for each pixel.
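The dependence on depth shows up in a standard pinhole back-projection. The sketch below is an assumption, not taken from the paper: it treats the intrinsics as `fx, fy, cx, cy`, the extrinsics as a camera-to-reference rotation `R` and translation `t`, and depth `d` as distance along the optical axis; `unproject` is a hypothetical name.

```python
def unproject(h, w, d, fx, fy, cx, cy, R, t):
    """Map pixel (row h, column w) at depth d to a 3D point in the reference frame.

    Assumes a pinhole camera (fx, fy, cx, cy) and camera-to-reference
    extrinsics given as a 3x3 rotation R (list of rows) and translation t.
    """
    # Back-project into camera coordinates; z is depth along the optical axis.
    x_cam = (w - cx) * d / fx
    y_cam = (h - cy) * d / fy
    p_cam = (x_cam, y_cam, d)
    # Rigid transform into the shared reference frame: R @ p_cam + t.
    return tuple(
        sum(R[i][k] * p_cam[k] for k in range(3)) + t[i] for i in range(3)
    )

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
# The same pixel lands at different 3D points depending on the assumed depth.
p2 = unproject(h=100, w=300, d=2.0, fx=500.0, fy=500.0, cx=200.0, cy=100.0,
               R=identity, t=(0.0, 0.0, 1.5))
p4 = unproject(h=100, w=300, d=4.0, fx=500.0, fy=500.0, cx=200.0, cy=100.0,
               R=identity, t=(0.0, 0.0, 1.5))
print(p2, p4)
```

Changing `d` slides the output along a single ray through the camera center; without a depth estimate the pixel only pins down that ray, not a point.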

Let $X \in \mathbb{R}^{3 \times H \times W}$ be an image with extrinsics $E$ and intrinsics $I$, and let $p$ be a pixel in the image with image coordinates $(h, w)$. We associate $|D|$ points $\{(h, w, d) \in \mathbb{R}^3 \mid d \in D\}$ to each pixel where $D$ is a set of discrete depths, for instance defined by $\{d_0 + \Delta, \ldots, d_0 + |D|\Delta\}$. Note that there are no learnable parameters in this transformation. We simply create a large point cloud for a given image of size $|D| \cdot H \cdot W$. This structure is equivalent to what the multi-view synthesis community has called a multi-plane image except in our case the features in each plane are abstract vectors instead of $(r, g, b, \alpha)$ values.
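Because the point cloud construction has no learnable parts, it can be sketched directly. Below, `depth_bins` and `frustum_points` are hypothetical helpers, and the values of `d0`, `delta`, `num_bins`, `H` and `W` are illustrative, not the paper's settings.

```python
def depth_bins(d0, delta, num_bins):
    """Discrete depths D = {d0 + delta, d0 + 2*delta, ..., d0 + |D|*delta}."""
    return [d0 + k * delta for k in range(1, num_bins + 1)]

def frustum_points(H, W, depths):
    """All (h, w, d) triples: |D| points per pixel, |D| * H * W in total.

    This is a fixed grid; nothing here is learned.
    """
    return [(h, w, d) for h in range(H) for w in range(W) for d in depths]

D = depth_bins(d0=1.0, delta=0.5, num_bins=4)
points = frustum_points(H=2, W=3, depths=D)
print(D, len(points))  # → [1.5, 2.0, 2.5, 3.0] 24
```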

The context vector for each point in the point cloud is parameterized to match a notion of attention and discrete depth inference. At pixel $p$, the network predicts a context $c \in \mathbb{R}^C$ and a distribution over depth $\alpha \in \triangle^{|D|-1}$ for every pixel. The feature $c_d \in \mathbb{R}^C$ associated to point $p_d$ is then defined as the context vector for pixel $p$ scaled by $\alpha_d$:

$$c_d = \alpha_d c$$
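A minimal pure-Python sketch of $c_d = \alpha_d c$ applied to a whole (tiny) image. It assumes the network emits raw depth scores that a softmax turns into $\alpha$; the excerpt only says a distribution is predicted, so the softmax is an assumption here, as are the helper names `softmax` and `lift`.

```python
import math

def softmax(logits):
    """Numerically stable softmax: returns a point on the probability simplex."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def lift(depth_logits, context):
    """Compute c_d = alpha_d * c for every pixel and depth bin.

    depth_logits[h][w] holds |D| raw scores and context[h][w] holds C features.
    Returns features[h][w][d] = alpha_d * c, an (H, W, |D|, C) nested list.
    """
    features = []
    for logit_row, ctx_row in zip(depth_logits, context):
        row = []
        for logits, c in zip(logit_row, ctx_row):
            alpha = softmax(logits)  # distribution over depth for this pixel
            row.append([[a * cj for cj in c] for a in alpha])  # outer product
        features.append(row)
    return features

# One-row, two-pixel toy image with |D| = 3 depth bins and C = 2 channels.
depth_logits = [[[2.0, 0.0, -1.0], [0.0, 0.0, 0.0]]]
context = [[[1.0, -2.0], [3.0, 0.5]]]
feats = lift(depth_logits, context)
```

Because each $\alpha$ sums to 1, summing the lifted features over the depth axis returns the original context vector for that pixel, which is a handy sanity check.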

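Explanation of $\triangle^{|D|-1}$

The superscript $|D|-1$ is the dimension of the probability simplex $\triangle^{|D|-1}$: the set of vectors with $|D|$ non-negative entries (one per depth bin) that sum to 1. Because of the sum-to-one constraint, only $|D|-1$ entries can be chosen freely; the last is fixed by the others. The triangle $\triangle$ here denotes the simplex, not the bin step size $\Delta$, even though the $|D|$ depths in $D$ also happen to span $|D|-1$ steps of size $\Delta$.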
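The sum-to-one constraint can be checked in a few lines. This pure-Python sketch uses hypothetical helpers: `on_simplex` tests whether a vector is a valid categorical distribution, and `complete` rebuilds a |D|-entry distribution from |D|-1 free values.

```python
def on_simplex(alpha, tol=1e-9):
    """True if alpha is a valid categorical distribution over len(alpha) bins."""
    return all(a >= -tol for a in alpha) and abs(sum(alpha) - 1.0) <= tol

def complete(free):
    """Rebuild a |D|-entry distribution from its first |D| - 1 entries.

    The last entry is fixed by the sum-to-one constraint, which is why the
    simplex over |D| bins is (|D| - 1)-dimensional.
    """
    return list(free) + [1.0 - sum(free)]

alpha = complete([0.2, 0.5, 0.1])  # |D| = 4 bins, only 3 free values
print(alpha, on_simplex(alpha))
```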

Note that if our network were to predict a one-hot vector for $\alpha$, context at the point $p_d$ would be non-zero exclusively for a single depth $d^*$. If the network predicts a uniform distribution over depth, the network would predict the same representation for each point $p_d$ assigned to pixel $p$ independent of depth. Our network is therefore in theory capable of choosing between placing context from the image in a specific location of the bird’s-eye-view representation versus spreading the context across the entire ray of space, for instance if the depth is ambiguous.
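The two extreme cases can be checked numerically. In this sketch `lift_pixel` is a hypothetical helper computing $c_d = \alpha_d c$ along one pixel's ray, and the values of `c` are made up.

```python
def lift_pixel(alpha, c):
    """Features along one pixel's ray: c_d = alpha_d * c for each depth bin d."""
    return [[a * cj for cj in c] for a in alpha]

c = [1.0, 2.0, 3.0]
one_hot = [0.0, 1.0, 0.0, 0.0]      # confident: all context at depth bin 1
uniform = [0.25, 0.25, 0.25, 0.25]  # ambiguous: context spread along the ray

sharp = lift_pixel(one_hot, c)
spread = lift_pixel(uniform, c)
# One-hot: only one depth bin has non-zero features, and they equal c.
print([d for d, f in enumerate(sharp) if any(f)])  # → [1]
# Uniform: every depth bin gets the same vector, c / |D|.
print(spread[0] == spread[3])                      # → True
```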

In summary, ideally, we would like to generate a function $g_c : (x, y, z) \in \mathbb{R}^3 \to c \in \mathbb{R}^C$ for each image that can be queried at any spatial location and return a context vector. To take advantage of discrete convolutions, we choose to discretize space. For cameras, the volume of space visible to the camera corresponds to a frustum.
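One way to make "the volume of space visible to the camera" concrete is a membership test. This sketch rests on assumptions not stated in the excerpt: a pinhole camera looking along +z with intrinsics `fx, fy, cx, cy`, an H x W image, and a depth range [`d_min`, `d_max`] such as the first and last entries of $D$; `in_frustum` is a hypothetical name.

```python
def in_frustum(x, y, z, fx, fy, cx, cy, H, W, d_min, d_max):
    """Check whether a camera-frame point lies inside the camera frustum.

    The frustum is taken to be the set of points that project inside the
    H x W image with depth z in [d_min, d_max].
    """
    # Points behind the camera or outside the depth range are excluded.
    if z <= 0 or not (d_min <= z <= d_max):
        return False
    u = fx * x / z + cx  # projected column coordinate
    v = fy * y / z + cy  # projected row coordinate
    return 0 <= u < W and 0 <= v < H

cam = dict(fx=500.0, fy=500.0, cx=200.0, cy=100.0, H=200, W=400,
           d_min=1.5, d_max=3.0)
print(in_frustum(0.4, 0.0, 2.0, **cam))  # → True
print(in_frustum(2.0, 0.0, 2.0, **cam))  # → False (projects off the right edge)
print(in_frustum(0.0, 0.0, 5.0, **cam))  # → False (beyond d_max)
```

Discretizing this frustum into the $|D| \cdot H \cdot W$ grid of pixel-and-depth cells is what lets the lifted features later be processed with ordinary discrete operations.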